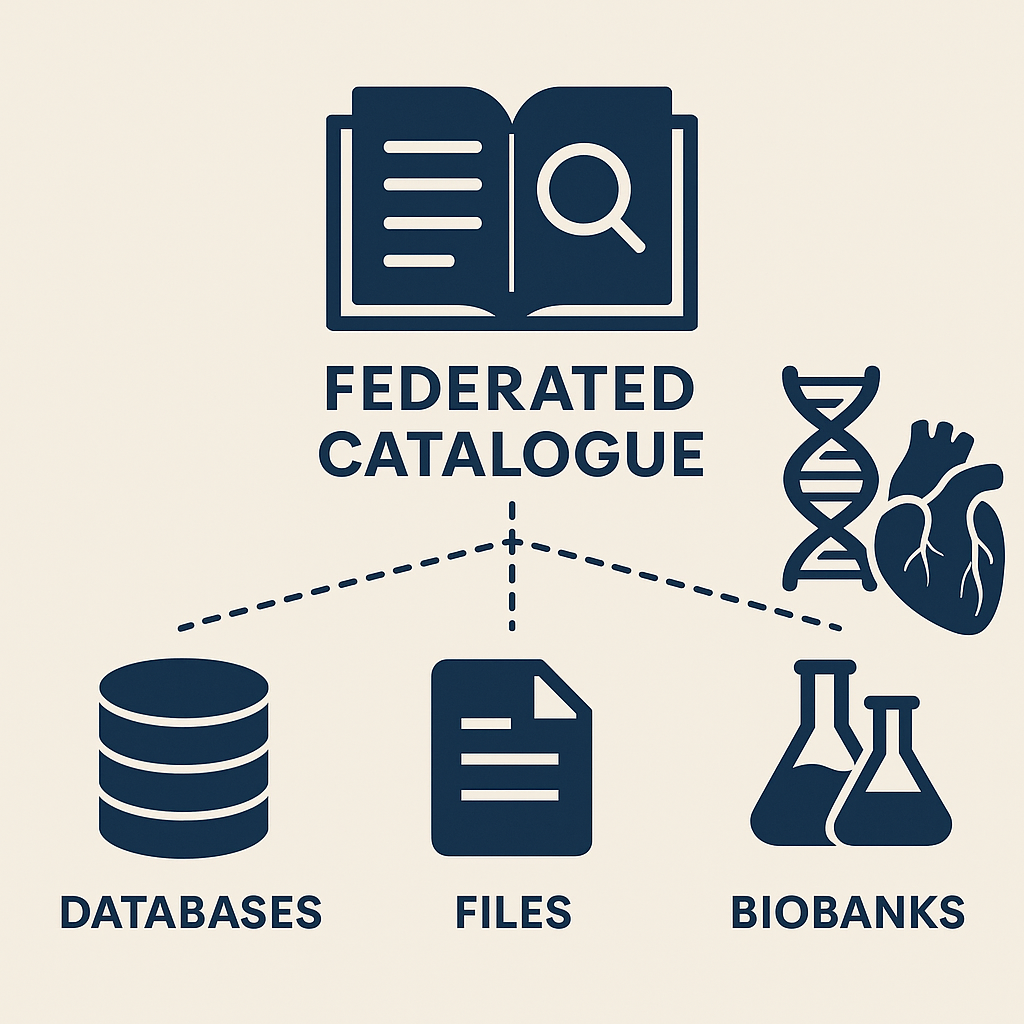

NextGen’s Federated Catalogue

NextGen aims to develop tools for advanced diagnostics, prediction and personalised medicine for cardiovascular disease, using sensitive data and information located at multiple sites. The sensitive nature of these data requires tools that search for multimodal datasets without letting the native information leave the site where it is stored and managed.

NextGen’s federated catalogue is a decentralised discovery interface that structures descriptive information, such as metadata, about data assets from multiple distributed, autonomous sources. This unified interface, a core component of NextGen’s data space architecture, allows users to find and process data that remains under local control at each source, without requiring any centralised aggregation.

The data in our project is multimodal and relates to cardiovascular disease and cardiovascular patients. The sources are various global biobanks managing complex, multimodal, and multiomic datasets that are crucial to NextGen’s research and experimentation.

NextGen’s federated catalogue allows each institution to retain full control over its data. The federation works by exposing only metadata and semantic descriptions, produced with advanced data characterisation tools, so raw data is never transferred. These tools abstract meaning from specific data formats, which lets the catalogue act as the common interface between different repositories while preserving the integrity of sensitive genomic and clinical information.

The federated catalogue enables search-and-match operations across databanks, supports cross-site cohort matching, and allows researchers to identify datasets suitable for their specific clinical or research questions. It also embeds consent and governance requirements that comply with the regulatory framework.
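
The search-and-match idea can be illustrated with a minimal Python sketch. It is not NextGen's implementation: the `SiteNode` and `DatasetDescription` classes, the `federated_search` function, the site names and the `permitted_uses` field are all hypothetical names chosen for illustration. The sketch assumes each site evaluates the query against its own metadata and returns only dataset descriptions, so no raw records cross a site boundary.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DatasetDescription:
    """Metadata-only description of a dataset (hypothetical schema)."""
    dataset_id: str
    site: str
    modalities: frozenset
    permitted_uses: frozenset  # assumed stand-in for consent terms


@dataclass
class SiteNode:
    """Hypothetical site endpoint that answers queries from local metadata."""
    name: str
    descriptions: list = field(default_factory=list)

    def search(self, required_modalities, purpose):
        # Matching runs locally; only descriptions are returned to the caller.
        return [
            d for d in self.descriptions
            if required_modalities <= d.modalities and purpose in d.permitted_uses
        ]


def federated_search(sites, required_modalities, purpose):
    """Send the query to every site and merge the metadata results."""
    required = frozenset(required_modalities)
    matches = []
    for site in sites:
        matches.extend(site.search(required, purpose))
    return sorted(matches, key=lambda d: (d.site, d.dataset_id))


# Illustrative, made-up catalogue entries.
sites = [
    SiteNode("biobank-a", [
        DatasetDescription("a-001", "biobank-a",
                           frozenset({"genomics", "imaging"}), frozenset({"research"})),
        DatasetDescription("a-002", "biobank-a",
                           frozenset({"imaging"}), frozenset({"research", "commercial"})),
    ]),
    SiteNode("biobank-b", [
        DatasetDescription("b-001", "biobank-b",
                           frozenset({"genomics", "imaging", "clinical"}), frozenset({"research"})),
        DatasetDescription("b-002", "biobank-b",
                           frozenset({"genomics", "imaging"}), frozenset({"commercial"})),
    ]),
]

results = federated_search(sites, {"genomics", "imaging"}, "research")
for d in results:
    print(d.site, d.dataset_id)
```

In this sketch the consent check is part of the local match, so a dataset whose permitted uses do not cover the stated purpose is never listed, even if its modalities fit the query.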

The catalogue is functionally integrated with:

- Multimodal Integration Objects (MMIOs), which link metadata, schemas, policies, and data transformation events, and serve as the vehicle for making datasets AI/ML-ready.

- Decentralised authentication mechanisms, ensuring data access is verifiable, governed, and aligned with privacy-preserving principles.

- Semantic interoperability tools, which allow the catalogue to handle datasets encoded in various standards (FHIR, OMOP, CDISC, etc.).

- A governance-aware architecture, including content-based identifiers and consent-aware filtering, ensuring lawful and ethical reuse of data.
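
As an illustration of content-based identifiers, the sketch below derives an identifier from a hash of a metadata record. The source does not say how NextGen computes its identifiers, so this is an assumption: it uses SHA-256 over a canonical JSON serialisation, and the `content_identifier` function, the `sha256:` prefix and the record fields are hypothetical.

```python
import hashlib
import json


def content_identifier(metadata: dict) -> str:
    """Return an identifier derived only from the record's content.

    Assumption: canonical JSON (sorted keys, fixed separators) hashed
    with SHA-256. Real systems may use a different scheme.
    """
    canonical = json.dumps(
        metadata, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return "sha256:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Made-up metadata records for illustration.
record = {"title": "Cardiac MRI cohort", "standard": "FHIR", "policy": "research-only"}
same_content = {"policy": "research-only", "standard": "FHIR", "title": "Cardiac MRI cohort"}
changed_policy = {**record, "policy": "open"}

print(content_identifier(record) == content_identifier(same_content))
print(content_identifier(record) == content_identifier(changed_policy))
```

Because the identifier depends only on the content, reordering the fields leaves it unchanged, while any change to the record, such as a different policy, yields a different identifier.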

The federated catalogue thus becomes more than a navigation tool: it is a bridge between data providers and AI-enabled research pipelines, advancing scientific collaboration while preserving autonomy, integrity, and trust.